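Setting up ELK with Docker

I originally planned to build ELK from just Elasticsearch, Kibana, and Logstash. Logstash, however, uses too much memory when running, so I decided to run Filebeat (which needs far fewer resources) on each server to collect the logs and hand them all to Logstash for processing and filtering.

Deploying Elasticsearch with Docker

Pull the image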

docker pull docker.elastic.co/elasticsearch/elasticsearch:7.5.1

Run the ES image

docker run -dit --name es -p 9200:9200 -p 9300:9300 \
  -v /home/es/data:/usr/share/elasticsearch/data \
  -v /home/es/plugin:/usr/share/elasticsearch/plugins \
  -v /usr/share/elasticsearch/config:/usr/share/elasticsearch/config \
  docker.elastic.co/elasticsearch/elasticsearch:7.5.1

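-p 9200, 9300 - port mappings

-v - volume mappings, so the data stays on the host even after the container is deleted

/usr/share/elasticsearch/data - data

/elasticsearch/config:/usr/share/elasticsearch/config - configuration

/usr/share/elasticsearch/plugins - plugins, for example the IK analyzer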

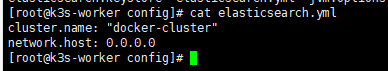

ES configuration

Deploying Kibana with Docker

Pull the image
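After `docker run` returns, Elasticsearch can still need some time before it answers on port 9200. A minimal readiness check could look like the sketch below. `wait_for_http` is a hypothetical helper name of my own, and `localhost:9200` assumes you are on the Docker host and used the `-p 9200:9200` mapping from the run command.

```shell
# wait_for_http URL [RETRIES] [DELAY_SECONDS]
# Polls URL with curl until a request succeeds or the retries run out.
# (Hypothetical helper, not part of Docker or Elasticsearch.)
wait_for_http() {
  url=$1
  retries=${2:-30}
  delay=${3:-2}
  attempt=1
  while [ "$attempt" -le "$retries" ]; do
    # -f: fail on HTTP error statuses; -sS: quiet, but still report errors;
    # --max-time: cap each attempt at 5 seconds
    if curl -fsS --max-time 5 "$url" >/dev/null 2>&1; then
      echo "up: $url"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  echo "timed out waiting for $url" >&2
  return 1
}

# Example (assumes the port mapping -p 9200:9200):
#   wait_for_http http://localhost:9200 && curl -s 'http://localhost:9200/_cluster/health?pretty'
```

If the check times out, `docker logs es` is usually the first place to look.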

docker pull docker.elastic.co/kibana/kibana:7.5.1

Run the Kibana image

docker run -dit --name kibana -p 5601:5601 \
  -e ELASTICSEARCH_HOSTS=http://es:9200/ \
  -v /home/kibana/config:/usr/share/kibana/config \
  docker.elastic.co/kibana/kibana:7.5.1

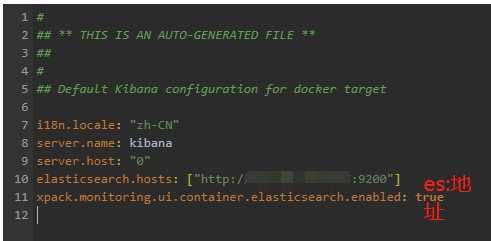

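Kibana configuration: set it through either the startup command or the config file, one of the two

Deploying Filebeat with Docker

Pull the image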
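The `ELASTICSEARCH_HOSTS=http://es:9200/` setting only works if the Kibana container can resolve the name `es`. On Docker's default bridge network, containers cannot reach each other by name, so one option is to attach both containers to a user-defined network. The sketch below assumes the container names `es` and `kibana` from the commands above. The network name `elk` and the helper name `join_network` are my own choices.

```shell
# Attach existing containers to a user-defined bridge network so they can
# reach each other by container name. (Hypothetical helper.)
# Usage: join_network NETWORK CONTAINER [CONTAINER...]
join_network() {
  network=$1
  shift
  # Create the network only if it does not exist yet.
  docker network inspect "$network" >/dev/null 2>&1 || docker network create "$network"
  for container in "$@"; do
    docker network connect "$network" "$container"
  done
}

# Example:
#   join_network elk es kibana
```

Alternatively, point `ELASTICSEARCH_HOSTS` at the host's IP and the published port 9200.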

docker pull docker.elastic.co/beats/filebeat:7.5.1

Run the Filebeat image

docker run -dit --name filebeat \
  -v /home/logs:/home/logs \
  -v /nfs/filebeat:/usr/share/filebeat/ \
  docker.elastic.co/beats/filebeat:7.5.1

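/home/logs - the location where the logs are stored must be mapped

/usr/share/filebeat - the Filebeat directory. How do you get its contents onto the host?

First run docker run -dit --name filebeat -v /home:/home docker.elastic.co/beats/filebeat:7.5.1 # -v maps an arbitrary host path, just to make copying easy

Then docker exec -it <container id> /bin/bash

cp -r ./* /home/

Afterwards remove this temporary container (docker rm -f filebeat) so the name is free for the real run.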
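As an alternative to copying from inside a running container, `docker cp` can copy a path out of a container that was created but never started. The following is only a sketch. It assumes the host directory `/nfs/filebeat` from the run command above, and both the helper name `export_filebeat_dir` and the temporary container name `filebeat-tmp` are placeholders of mine.

```shell
# Copy the image's default /usr/share/filebeat directory to a host directory,
# using a temporary container that is created but never started.
# (Hypothetical helper.) Usage: export_filebeat_dir IMAGE TARGET_DIR
export_filebeat_dir() {
  image=$1
  target=$2
  mkdir -p "$target" || return 1
  docker create --name filebeat-tmp "$image" >/dev/null || return 1
  # The trailing "/." copies the directory's contents, not the directory itself.
  docker cp filebeat-tmp:/usr/share/filebeat/. "$target"
  status=$?
  # Always clean up the temporary container.
  docker rm filebeat-tmp >/dev/null
  return "$status"
}

# Example:
#   export_filebeat_dir docker.elastic.co/beats/filebeat:7.5.1 /nfs/filebeat
```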

filebeat.yml configuration

### Load the config files in modules.d
filebeat.config:
  modules:
    path: ${path.config}/modules.d/*.yml # path
    reload.enabled: false

processors:
  - add_cloud_metadata: ~
  - add_docker_metadata: ~

## Log sources
filebeat.inputs:
  - type: log # input type: log, tcp, udp, etc.
    tags: ["nginx"]
    enabled: true
    paths:
      - /home/logs/nginx/*.log
  - type: log
    tags: ["halo-executor"]
    enabled: true
    paths:
      - /home/logs/**/*.log
    ## Add these two lines so that JSON-formatted logs can be parsed; keep them aligned with paths
    json.keys_under_root: true
    json.overwrite_keys: true

### Output to Logstash; output can also go directly to ES, Kafka, Redis, etc.
output.logstash:
  enabled: true
  # Logstash ip:5044
  hosts: ["ip:5044"]

#output.elasticsearch:
#  hosts: ["es-ip:9200"]

## Kibana visualization
setup.kibana:
  ## Kibana's IP; if a username and password are required, configure them as well
  host: "http://kibana-ip:5601"
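YAML is indentation-sensitive, so it is worth checking the file before relying on it. Filebeat ships `test config` and `test output` subcommands for this. The sketch below runs them inside the container. It assumes the container is named `filebeat` as in the run command above and that the `filebeat` binary is on the container's PATH, which as far as I know is the case in the official image. `check_filebeat` is a hypothetical helper name.

```shell
# Run Filebeat's built-in checks inside a running container.
# (Hypothetical helper.) Usage: check_filebeat [CONTAINER_NAME]
check_filebeat() {
  container=${1:-filebeat}
  # Validates the syntax and settings of the loaded filebeat.yml.
  docker exec "$container" filebeat test config || return 1
  # Tries to connect to the configured output (here: Logstash on port 5044).
  docker exec "$container" filebeat test output
}

# Example:
#   check_filebeat filebeat
```

A failing `test output` usually points at the `hosts` entry under `output.logstash` or at Logstash not listening yet.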

Deploying Logstash with Docker

Pull the image

docker pull docker.elastic.co/logstash/logstash:7.5.1

Run the image

docker run -dit --name logstash \
  -p 5044:5044 -p 9600:9600 \
  -v /nfs/logstash/config:/usr/share/logstash/config \
  -v /nfs/logstash/pipeline:/usr/share/logstash/pipeline \
  docker.elastic.co/logstash/logstash:7.5.1

Detailed configuration files

/config/logstash.yml

http.host: "0.0.0.0"
xpack.monitoring.enabled: true
## elasticsearch.hosts: IP of the machine where ES runs
xpack.monitoring.elasticsearch.hosts: [ "49.232.0.78:9200" ]

/config/pipelines.yml

# This file is where you define your pipelines. You can define multiple.
# For more information on multiple pipelines, see the documentation:
# https://www.elastic.co/guide/en/logstash/current/multiple-pipelines.html

- pipeline.id: main
  path.config: "/usr/share/logstash/pipeline"

/pipeline/logstash.conf

## Log source: here events come from Filebeat over a port; they can also come from file, kafka, redis, etc.
input {
  beats {
    port => 5044
  }
}

## Custom log format, field filtering, etc. (optional)
filter {
  if "nginx" in [tags] {
    grok {
      match => { "message" => "%{IP:remote_addr} (?:%{DATA:remote_user}|-) \[%{HTTPDATE:timestamp}\] %{IPORHOST:http_host} %{DATA:request_method} %{DATA:request_uri} %{NUMBER:status} (?:%{NUMBER:body_bytes_sent}|-) (?:%{DATA:request_time}|-) \"(?:%{DATA:http_referer}|-)\" \"%{DATA:http_user_agent}\" (?:%{DATA:http_x_forwarded_for}|-) \"(?:%{DATA:http_cookie}|-)\"" }
    }
    mutate {
      convert => {
        "status" => "integer"
        "body_bytes_sent" => "integer"
        "request_time" => "float"
      }
    }
    geoip {
      source => "remote_addr"
    }
    date {
      match => [ "timestamp", "dd/MMM/YYYY:HH:mm:ss Z" ]
    }
    useragent {
      source => "http_user_agent"
    }
  }
}

## Output section: here I branch on tags to create a separate index per source
output {
  if "nginx" in [tags] {
    elasticsearch {
      hosts => ["es-ip:9200"]
      index => "nginx"
    }
    stdout { codec => rubydebug }
  }
  if "halo-executor" in [tags] {
    elasticsearch {
      hosts => ["es-ip:9200"]
      index => "halo-executor"
    }
    stdout { codec => rubydebug }
  }
}
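To check that events arrive end to end, you can ask Elasticsearch whether the indices named in the output section exist and how many documents they hold. The sketch below uses the `_cat/indices` API. `es-ip:9200` is the placeholder address from the config above, and `list_indices` is a hypothetical helper name.

```shell
# List the given indices through Elasticsearch's _cat/indices API.
# (Hypothetical helper.) Usage: list_indices ES_HOST:PORT INDEX [INDEX...]
list_indices() {
  es=$1
  shift
  # Join the index names with commas, e.g. "nginx,halo-executor".
  names=$(printf '%s,' "$@")
  names=${names%,}
  # ?v adds a header row to the tabular output.
  curl -fsS "http://$es/_cat/indices/$names?v"
}

# Example (replace es-ip with the real address):
#   list_indices es-ip:9200 nginx halo-executor
```

If an index is missing, the `stdout { codec => rubydebug }` output in `docker logs logstash` shows whether events reached Logstash at all.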
